Feb 20, 2026

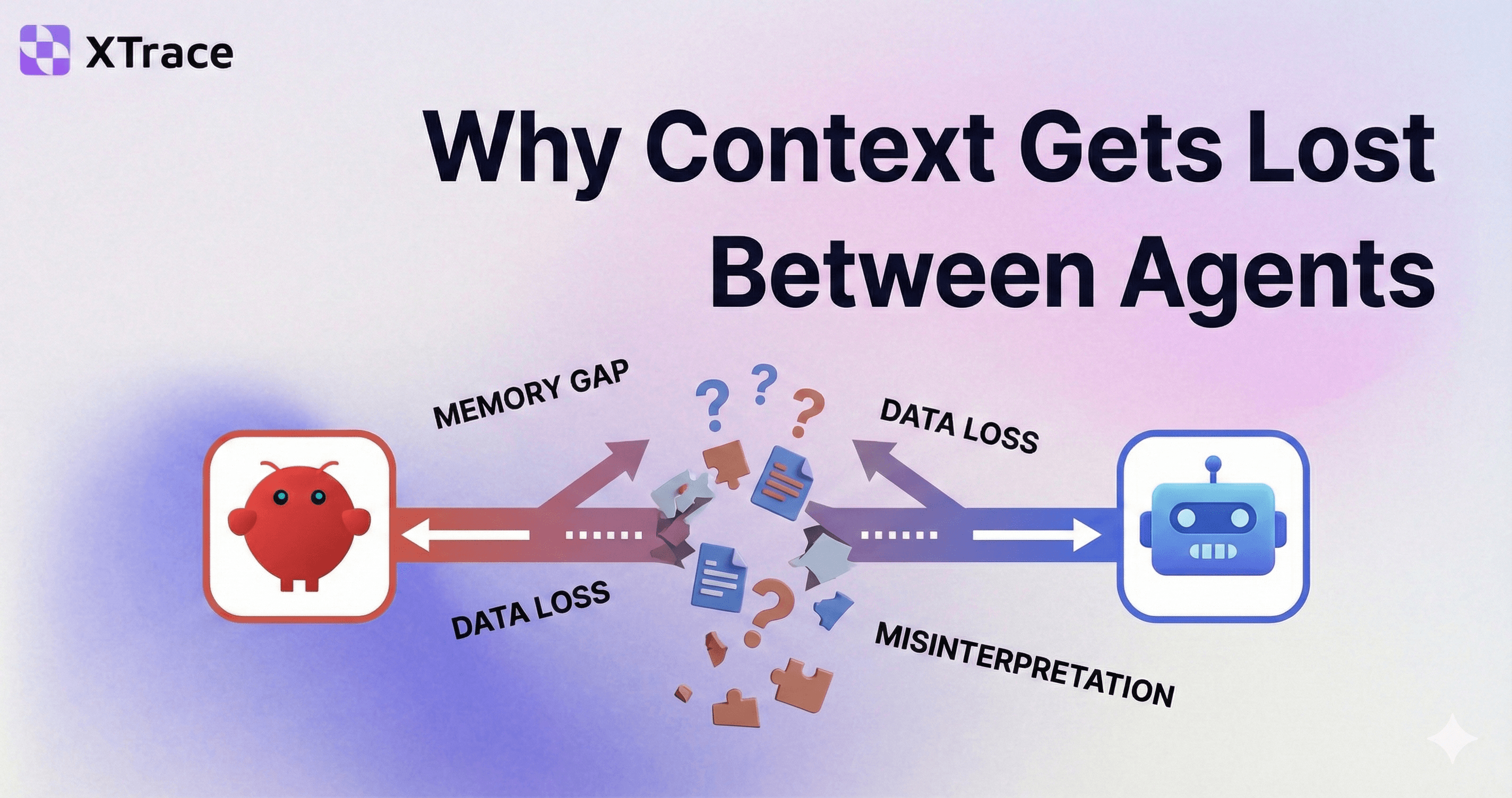

AI agents lose decisions, evidence, and reasoning every time they hand off context. Here's why structured memory, not summaries, is what multi-agent workflows actually need.

Every agent framework has a handoff mechanism. They all solve the same problem: Agent A finishes, Agent B starts, and something gets thrown over the wall. What gets thrown over is almost always the wrong thing.

The context dump fallacy

Here's what agent handoff looks like today. A research agent spends 45 minutes analyzing competitors, reading documents, extracting pricing data, comparing feature sets. It produces a detailed analysis. Then it "hands off" to a strategy agent.

What does the strategy agent actually receive? One of two things:

Everything. The full message history, every search query, every dead end, every intermediate thought. The strategy agent wades through 40,000 tokens of process to find the 3,000 tokens of insight. It's the equivalent of handing a new employee every email you sent last month and saying "good luck."

A summary. The research agent compresses 45 minutes of work into a paragraph. The strategy agent gets the headline but none of the supporting evidence. When it needs to verify a claim ("You said Paddle handles EU VAT automatically; what's your source?"), the answer is gone. It was compressed away.

Neither option works. One drowns the receiving agent in noise. The other strips away the evidence. And both share a deeper flaw: they treat handoff as a one-time data transfer, a blob of text thrown from one agent to another.

It's not just agents. It's everywhere.

The agent-to-agent case is the most obvious, but the same pattern shows up everywhere people use AI:

Switching tools. You spend an hour refining a blog post in ChatGPT. You switch to Claude to tighten the intro. Claude has no idea what you're talking about.

Resuming work. You start a project plan on Monday. By Friday, the session is gone. You open a new chat: "Remember the project plan we worked on?" It doesn't.

Team handoffs. A PM builds a PRD over three sessions. An engineer opens a new session to write the technical design. The decisions, the constraints, the reasoning — none of it carries over.

Long-running workflows. An agent researches, another drafts, another reviews. Each starts cold. The review agent doesn't have the research that informed the draft.

Every one of these is a handoff problem. And every one is currently "solved" by either dumping everything or summarizing it away.

Why summaries aren't the answer

Every AI tool already has some version of context compression. Claude Code has autocompact. ChatGPT compresses old messages. Most agent frameworks truncate history when the context window fills up.

You might ask: how is handoff any different from summarization?

If the handoff is just "summarize this session and paste it into the next agent's system prompt," it isn't different. It's autocompact with extra steps. And it loses information the same way: silently, unpredictably, with no way for the receiving agent to ask follow-up questions about what was compressed away.

The difference only exists if you change what gets handed off. Not a text blob, but structured knowledge the receiving agent can query.

What good handoff actually looks like: briefings, not data dumps

Think about how real teams work. When someone joins a project mid-stream, nobody hands them a transcript of every meeting. And nobody gives them a one-paragraph summary. They get a briefing: structured, prioritized, queryable.

A good briefing has layers:

Decisions. The non-negotiable constraints. "We're building in-house because third-party integration doesn't support our data model." If the new person doesn't know these, they'll confidently make the wrong call.

Artifacts. The actual deliverables, the analysis, the architecture doc. Not summaries. The real documents, because someone might need to reference specific sections.

Preferences and patterns. Context that accumulated along the way. "The CEO wants bullet points, not prose." "We tried microservices and rolled it back."

Timeline. What was done, when, and by whom. The least urgent layer, but it gives narrative coherence.
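To make the layering concrete, here is a minimal Python sketch of a briefing builder. It assumes each memory entry is tagged with one of the four layers above. `Entry`, the layer keys, and `build_briefing` are hypothetical names for illustration, not any product's API. The point is that entries can be recorded in any order, and the briefing still renders decisions first and timeline last.

```python
from dataclasses import dataclass

# Hypothetical layer keys and headings, ordered from most to least urgent,
# mirroring the four briefing layers described in the text.
LAYERS = [
    ("decision", "Decisions"),
    ("artifact", "Artifacts"),
    ("preference", "Preferences and patterns"),
    ("timeline", "Timeline"),
]
LAYER_KEYS = {key for key, _ in LAYERS}


@dataclass
class Entry:
    layer: str  # one of the keys in LAYERS
    text: str

    def __post_init__(self) -> None:
        if self.layer not in LAYER_KEYS:
            raise ValueError(f"unknown layer: {self.layer!r}")


def build_briefing(entries: list[Entry]) -> str:
    """Group entries by layer and render the most urgent layers first."""
    lines: list[str] = []
    for key, heading in LAYERS:
        items = [e.text for e in entries if e.layer == key]
        if items:
            lines.append(f"{heading}:")
            lines.extend(f"  - {text}" for text in items)
    return "\n".join(lines)


# Entries are recorded in whatever order they happened...
entries = [
    Entry("timeline", "Research agent completed the competitor analysis."),
    Entry("preference", "The CEO wants bullet points, not prose."),
    Entry("decision", "Build in-house: third-party integration doesn't support our data model."),
    Entry("artifact", "competitor_analysis.md (the full document, not a summary)"),
]
# ...but the briefing always leads with decisions.
briefing = build_briefing(entries)
print(briefing)
```

The design choice that matters is ordering by layer rather than by time. The receiving agent reads the constraints before it reads the history.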

This is what agent handoff should look like. Not a context dump. Not a summary. A structured briefing backed by queryable memory.

How structured memory changes agent handoff

When agents share structured, queryable memory instead of passing text blobs, several things change at once:

No more cold starts. Every agent in a workflow starts with full context, not because it received a dump, but because it can query the accumulated knowledge of every agent that came before it.

No more telephone games. The fifth agent in a chain has the same access to the original research as the first. Context doesn't degrade through successive summarization.

No more lost decisions. When Agent A decides "focus on enterprise" and Agent C needs to know why, the reasoning is there, linked to the decision, traceable to the evidence.
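Here is one minimal sketch of what "linked to the decision, traceable to the evidence" could mean in code. It assumes a single in-process store that every agent in the chain reads and writes directly. `SharedMemory`, `Fact`, `Decision`, and `why` are hypothetical names, and the fact and decision contents are placeholders. No agent re-summarizes what it passes along. As a result, a later agent that calls `why` gets back the original fact objects, with their source and author intact.

```python
from dataclasses import dataclass, field


@dataclass
class Fact:
    id: str
    text: str
    source: str       # where the fact came from
    recorded_by: str  # which agent wrote it


@dataclass
class Decision:
    id: str
    text: str
    reasoning: str
    evidence_ids: list[str]  # links to Fact ids
    decided_by: str


@dataclass
class SharedMemory:
    """A toy in-memory store that every agent in the chain reads and writes."""
    facts: dict[str, Fact] = field(default_factory=dict)
    decisions: dict[str, Decision] = field(default_factory=dict)

    def add_fact(self, fact: Fact) -> None:
        self.facts[fact.id] = fact

    def add_decision(self, decision: Decision) -> None:
        # Refuse decisions whose cited evidence is not actually in memory.
        missing = [i for i in decision.evidence_ids if i not in self.facts]
        if missing:
            raise KeyError(f"decision cites unknown evidence: {missing}")
        self.decisions[decision.id] = decision

    def why(self, decision_id: str) -> tuple[str, list[Fact]]:
        """Return a decision's reasoning plus the original facts it rests on."""
        d = self.decisions[decision_id]
        return d.reasoning, [self.facts[i] for i in d.evidence_ids]


memory = SharedMemory()

# Placeholder content: the ids, sources, and wording are hypothetical.
memory.add_fact(Fact(
    id="f1",
    text="Example finding from the competitor analysis (placeholder).",
    source="research notes, doc 3 (placeholder)",
    recorded_by="research-agent",
))
memory.add_decision(Decision(
    id="d1",
    text="Focus on enterprise.",
    reasoning="Placeholder reasoning recorded by Agent A.",
    evidence_ids=["f1"],
    decided_by="agent-a",
))

# Agent C (or the fifth agent in the chain) asks why and receives the
# original fact with its provenance, not a paraphrase of a paraphrase.
reasoning, evidence = memory.why("d1")
for fact in evidence:
    print(fact.text, "| source:", fact.source, "| recorded by:", fact.recorded_by)
```

A real system would persist this store and handle concurrent writers. This toy keeps everything in one Python process.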

Cross-tool continuity. The same memory layer that connects agents in a pipeline also connects a person switching between ChatGPT and Claude. Whether the "new agent" is a strategy bot or a human opening a new chat window, the briefing works the same way.

And critically, the memory outlives any single workflow. Three months later, when the team revisits the strategy, the next agent doesn't start from zero. The research, the decisions, the competitive data — it's all still there. Context doesn't just transfer between agents. It compounds with every interaction.

How XTrace solves this

XTrace is the context layer for AI. It sits between your agents, tools, and workflows as a private, portable memory layer that belongs to you.

Instead of passing text between agents, XTrace captures what matters — decisions, artifacts, facts, and preferences — as structured, typed objects in a shared memory layer. When a new agent joins a workflow, it doesn't receive a dump. It queries the memory for a briefing: "What do I need to know to write a GTM strategy?" The system retrieves the relevant artifacts, decisions, and facts, prioritized by relevance.
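The retrieval step can be illustrated with a toy ranker. This is not XTrace's API or ranking method, and the post doesn't describe either. The sketch assumes memory items are typed records. It uses plain keyword overlap as a stand-in for whatever relevance scoring a production system would use, such as embedding similarity. All names are hypothetical.

```python
import re
from dataclasses import dataclass

# Hypothetical type priority, used only to break ties between equally relevant items.
TYPE_PRIORITY = {"decision": 0, "artifact": 1, "fact": 2, "preference": 3}
STOPWORDS = {"a", "an", "the", "to", "do", "i", "what", "need", "know", "for", "of", "is", "on"}


@dataclass
class MemoryItem:
    kind: str  # "decision", "artifact", "fact", or "preference"
    text: str


def tokens(text: str) -> set[str]:
    """Lowercase word set with a few stopwords removed."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def briefing_for(query: str, items: list[MemoryItem], k: int = 3) -> list[MemoryItem]:
    """Rank items by word overlap with the query; ties go to higher-priority types."""
    q = tokens(query)
    scored = [(len(q & tokens(item.text)), item) for item in items]
    relevant = [(score, item) for score, item in scored if score > 0]
    relevant.sort(key=lambda pair: (-pair[0], TYPE_PRIORITY[pair[1].kind]))
    return [item for _, item in relevant[:k]]


# Illustrative memory contents.
items = [
    MemoryItem("preference", "The CEO wants bullet points, not prose."),
    MemoryItem("decision", "Focus the GTM strategy on enterprise buyers."),
    MemoryItem("artifact", "Competitor pricing analysis for the GTM plan."),
    MemoryItem("fact", "Paddle handles EU VAT automatically."),
]

for item in briefing_for("What do I need to know to write a GTM strategy?", items):
    print(item.kind, "->", item.text)
```

With the query from the text, only the decision and the artifact mention the GTM strategy. The preference and the unrelated fact are left out of the briefing instead of being dumped on the receiving agent.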

Because XTrace memory is portable and user-owned, it works across tools, across agents, and across time. Your context isn't locked inside any single vendor's walls. It travels with you.

Looking forward: from handoff to shared understanding

The industry is building agents that can reason, plan, and execute. But every pipeline is only as good as its weakest handoff. And right now, every handoff is either a flood or a trickle — too much context or too little.

What's needed is a layer that sits between agents, not passing messages, but building shared understanding. Agents that don't just hand off context, but share memory.

That's what turns a chain of isolated agents into a team.

Frequently asked questions

What is agent handoff in AI?

Agent handoff is the process of transferring context, state, and responsibility from one AI agent to another during a multi-agent workflow. Most frameworks handle this by passing message histories or summaries, but both approaches lose critical information. Effective handoff requires structured, queryable memory rather than raw text transfer.

Why do AI agents lose context during handoff?

AI agents lose context because current handoff mechanisms treat context as a one-time data transfer. Either the full message history is passed (overwhelming the receiving agent with noise) or it's summarized (stripping away evidence and reasoning). Neither approach preserves the structured relationships between decisions, artifacts, and facts that the receiving agent needs.

What is the difference between context window and memory for AI agents?

A context window holds immediate information within a single session but disappears when the session ends. Memory persists across sessions, tools, and agents. Context windows help agents stay consistent within a conversation; memory allows agents to be intelligent across conversations. Even with context windows reaching 100K+ tokens, they lack the persistence, prioritization, and structure that real agent workflows require.
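The distinction can be sketched in a few lines of Python. The sketch treats memory as nothing more than a JSON file on disk, a deliberately simple stand-in. `save_memory`, `load_memory`, and the file location are hypothetical. The context window is an in-process list that vanishes when the session ends. Anything written to memory is still there when a later session loads it.

```python
import json
import tempfile
from pathlib import Path


def save_memory(path: Path, memory: dict) -> None:
    """Persist memory to disk so it outlives the session that created it."""
    path.write_text(json.dumps(memory, indent=2), encoding="utf-8")


def load_memory(path: Path) -> dict:
    """Load memory in a later session; start empty if nothing was saved yet."""
    if not path.exists():
        return {"decisions": [], "facts": []}
    return json.loads(path.read_text(encoding="utf-8"))


store = Path(tempfile.mkdtemp()) / "memory.json"  # hypothetical storage location

# Session 1: the context window holds the conversation, and anything worth
# keeping is also written to memory before the session ends.
context_window = ["user: let's plan the project", "assistant: here's a draft plan"]
memory = load_memory(store)
memory["decisions"].append("Build in-house: third-party integration doesn't support our data model.")
save_memory(store, memory)
del context_window  # session ends; the context window is gone

# Session 2 (a different tool, agent, or day): the context window starts
# empty, but the decision can be reloaded from memory.
context_window = []
memory = load_memory(store)
print(memory["decisions"])
```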

How does structured memory improve multi-agent workflows?

Structured memory stores decisions, artifacts, facts, and preferences as typed, linked objects rather than raw text. This means receiving agents can query specific information ("what was the competitor's conversion rate?") and get precise answers with provenance, rather than searching through a text dump or relying on a lossy summary. Context accumulates across the workflow instead of degrading at each handoff.

© 2026 XTrace. All rights reserved.